Whitebox Assessment

The English user guide is currently in beta preview. Most of the documents have been automatically translated from the Japanese version. Should you find any inaccuracies, please reach out to Flatt Security.

This page explains the internal architecture of Takumi's whitebox assessment. For usage instructions, see Whitebox Assessment.

Overview

Takumi's whitebox assessment uses LLM-powered agents to discover vulnerabilities through static code analysis.

When an assessment begins, the repository containing the target code is cloned into a sandbox environment. Multiple LLM agents work together in a workflow, reading the code with tools that run inside the sandbox to search for vulnerabilities.

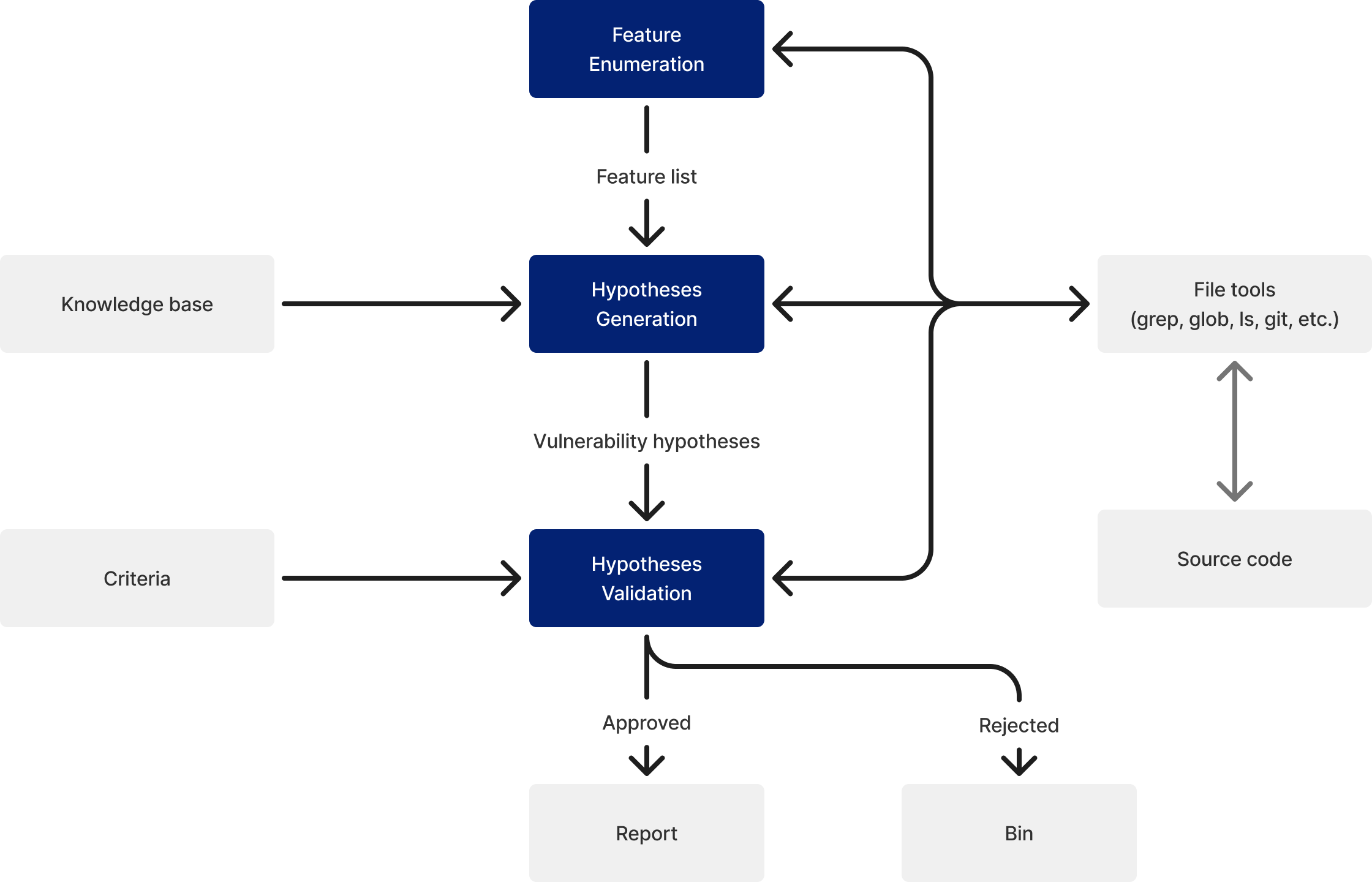

The role of each agent and the data exchanged between them are as follows:

- Feature Enumeration: The agent uses file browsing and search tools to analyze the code structure and outputs a list of features for systematically discovering vulnerabilities in subsequent stages

- Hypothesis Generation: The agent reads the code of each feature and outputs vulnerability hypotheses (locations of potentially vulnerable code and their rationale)

- Hypothesis Validation: The agent traces data flows in detail, referencing application-specific validation criteria, to determine whether each hypothesis is true or false

- Finally, a report is generated from the validated vulnerabilities
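The stage-by-stage data flow above can be sketched as a simple pipeline. This is a minimal illustration under stated assumptions, not Takumi's implementation. The `Feature`, `Hypothesis`, and `Finding` types and the `run_assessment` function are hypothetical, and each agent stage is stood in for by a plain function passed in by the caller.

```python
from dataclasses import dataclass


@dataclass
class Feature:
    name: str
    summary: str
    files: list[str]  # files associated with this feature


@dataclass
class Hypothesis:
    feature: str
    location: str   # e.g. "path/to/file.py:42"
    rationale: str  # why the code is suspected to be vulnerable


@dataclass
class Finding:
    hypothesis: Hypothesis
    attack_scenario: str


def run_assessment(enumerate_features, generate_hypotheses, validate) -> list[Finding]:
    """Hypothetical wiring of the three agent stages; each stage is a caller-supplied function."""
    report: list[Finding] = []
    for feature in enumerate_features():
        # Each feature is handled independently, so a stage only sees a bounded slice of code.
        for hypothesis in generate_hypotheses(feature):
            finding = validate(hypothesis)  # a Finding, or None if the hypothesis is false
            if finding is not None:
                report.append(finding)
    return report
```

Only validated findings reach the returned report, mirroring the final report-generation step.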

The sandbox environment is isolated per assessment. That is, the environment used for one assessment is never shared with another. This guarantees data isolation between different assessment targets.

Tools Used by Agents

Each agent uses a set of tools that run inside the sandbox to explore the target code autonomously. These tools fall into three broad categories:

- File browsing and exploration: Reading file contents, listing directory structures, searching for files matching patterns, etc., to understand the codebase structure

- Code search: Full-text search using regular expressions to efficiently find specific function calls or patterns across the entire codebase

- Git: Referencing commit history and diffs to understand the history of code changes and recently modified areas
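As a rough illustration of the code search category, a minimal regular-expression search tool might look like the following. `search_code` and its parameters are hypothetical; this guide does not describe the actual tool interface the agents call.

```python
import os
import re


def search_code(root: str, pattern: str, max_results: int = 100) -> list[tuple[str, int, str]]:
    """Hypothetical regex search: return (path, line number, line text) for each matching line."""
    regex = re.compile(pattern)
    results = []
    for dirpath, dirnames, filenames in os.walk(root):
        dirnames[:] = [d for d in dirnames if d != ".git"]  # skip Git internals
        for name in sorted(filenames):
            path = os.path.join(dirpath, name)
            try:
                with open(path, encoding="utf-8") as f:
                    for lineno, line in enumerate(f, start=1):
                        if regex.search(line):
                            results.append((path, lineno, line.rstrip("\n")))
                            if len(results) >= max_results:
                                return results
            except (UnicodeDecodeError, OSError):
                continue  # skip binary or unreadable files
    return results
```

A call such as `search_code(repo_dir, r"\.execute\(")` would list every line that appears to invoke a database `execute` method, which an agent could then open with the file browsing tools.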

By combining these tools, agents autonomously perform tasks such as understanding the codebase structure, identifying relevant files, and tracing data flows, just as a human security engineer would.

Vulnerability Discovery Mechanism

The whitebox assessment uses a divide-and-conquer approach to comprehensively analyze the features of a codebase.

LLMs have an upper limit on the number of tokens they can process at once (context window), so it is not feasible to pass an entire large codebase for analysis. Therefore, the codebase is first divided into meaningful units called features, and vulnerability hypothesis generation and validation are performed independently for each feature. This allows each agent to focus on a limited scope of code at all times, enabling detailed analysis within the constraints of the context window.

Feature Enumeration

First, the target codebase is divided into feature units. This process consists of three phases.

Discovery of Exclusion Patterns

Files that are not important from a security assessment perspective are identified and excluded from scope. For example, the following types of files are excluded:

- Test files (`*_test.go`, `*_spec.rb`, etc.), lock files, media files, documentation, and third-party code (`node_modules/`, `vendor/`, etc.) are excluded unconditionally

- Additionally, project-specific exclusion patterns discovered by the agent are excluded (e.g., directories containing large amounts of auto-generated code, directories containing only data, etc.)

- Files explicitly designated as out-of-scope by the user are also excluded from the assessment
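The exclusion step can be pictured as a path filter. The directory names and glob patterns below are illustrative examples drawn from the list above, not Takumi's actual rule set, and `in_scope` is a hypothetical helper that takes agent-discovered and user-specified patterns as extra arguments.

```python
from fnmatch import fnmatchcase

# Illustrative defaults based on the categories listed above; not Takumi's real rule set.
EXCLUDED_DIRS = {"node_modules", "vendor"}  # third-party code
EXCLUDED_GLOBS = [
    "*_test.go", "*_spec.rb",         # test files
    "*.lock", "package-lock.json",    # lock files
    "*.png", "*.jpg", "*.mp4",        # media files
    "*.md",                           # documentation
]


def in_scope(path: str, agent_patterns=(), user_patterns=()) -> bool:
    """Return True if a repository-relative path (using '/' separators) should be assessed."""
    parts = path.split("/")
    # Exclude anything under a third-party directory, at any depth.
    if any(part in EXCLUDED_DIRS for part in parts[:-1]):
        return False
    name = parts[-1]
    for pattern in [*EXCLUDED_GLOBS, *agent_patterns, *user_patterns]:
        # Check the file name and the full path so both "*.md" and "*/gen/*" style patterns work.
        if fnmatchcase(name, pattern) or fnmatchcase(path, pattern):
            return False
    return True
```

Here an agent-discovered pattern such as `"*/gen/*"` would drop a generated-code directory, and a user-specified pattern such as `"scripts/*"` would drop files the user marked out of scope.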

Feature Identification

The agent uses file browsing tools to analyze the code structure and identifies security-relevant features such as API endpoints, authentication handling, middleware, webhook handlers, etc. Each feature includes a name, summary, and a list of associated files.

Each time a file is assigned to a feature, the scope filter is updated accordingly, so subsequent exploration covers only files that have not yet been visited.
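A minimal sketch of the scope filter, assuming it simply tracks which files have not yet been assigned to any feature. `ScopeFilter` and its methods are hypothetical names, not part of Takumi.

```python
class ScopeFilter:
    """Hypothetical tracker: files assigned to a feature drop out of later exploration."""

    def __init__(self, files):
        self.unvisited = set(files)  # in-scope files not yet assigned to a feature
        self.assignments = {}        # feature name -> list of assigned files

    def assign(self, feature: str, files) -> None:
        files = list(files)
        self.assignments.setdefault(feature, []).extend(files)
        self.unvisited.difference_update(files)

    def remaining(self) -> list[str]:
        # Files the agent still needs to examine, in a stable order.
        return sorted(self.unvisited)
```

Once `remaining()` returns an empty list, every in-scope file belongs to at least one feature.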

Prioritization

When a large number of features are identified, prioritization is performed based on threat modeling conducted by the agent. The agent explores the codebase, evaluates areas of high value to an attacker, assigns priority by feature type (e.g., authentication, payment, file upload), and concentrates assessment resources on high-priority areas.
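Prioritization could be approximated by ranking features by type and keeping the top entries. The weights, the `budget` cut-off, and the `prioritize` function are assumptions for illustration only; in Takumi, priorities come from the agent's own threat modeling rather than a fixed table.

```python
# Illustrative weights only; Takumi derives priorities from agent-driven threat modeling.
TYPE_PRIORITY = {"authentication": 3, "payment": 3, "file_upload": 2}


def prioritize(features: list[tuple[str, str]], budget: int) -> list[tuple[str, str]]:
    """features: (name, type) pairs. Return up to `budget` features, highest priority first."""
    # Unknown types get the lowest weight; sorted() is stable, so ties keep their input order.
    ranked = sorted(features, key=lambda f: TYPE_PRIORITY.get(f[1], 1), reverse=True)
    return ranked[:budget]
```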

Vulnerability Hypothesis Brainstorming

For each enumerated feature, the agent applies security expertise to generate vulnerability hypotheses, reading the target code with file browsing and Git tools as it builds them. The focus is on high-impact vulnerabilities such as authentication/authorization bypass, SQL injection, XSS, SSRF, path traversal, and race conditions. Conversely, categories that are difficult for attackers to exploit directly or that are typically addressed outside the code, such as missing security-related HTTP headers, lack of request rate limiting, and insufficient logging, are excluded from reporting.
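A hypothesis pairs a suspected code location with its rationale. The sketch below uses hypothetical category names and a hypothetical `reportable` helper to show how the excluded low-impact categories could be filtered out before reporting.

```python
from dataclasses import dataclass

# Hypothetical labels for the categories this guide says are excluded from reporting.
EXCLUDED_CATEGORIES = {"missing_security_headers", "no_rate_limiting", "insufficient_logging"}


@dataclass
class Hypothesis:
    category: str   # e.g. "sql_injection", "ssrf", "path_traversal"
    location: str   # file and line of the suspected code
    rationale: str  # why the agent believes the code is vulnerable


def reportable(hypotheses: list[Hypothesis]) -> list[Hypothesis]:
    """Keep only hypotheses whose category is eligible for reporting."""
    return [h for h in hypotheses if h.category not in EXCLUDED_CATEGORIES]
```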

Hypothesis Validation

The generated hypotheses are validated one by one. In this process, the agent uses file browsing tools and Git tools to re-analyze the code in detail and determines whether each vulnerability is valid based on the following perspectives:

- Data flow analysis: Identify the origin of external input and trace the entire path to the vulnerable code. Verify the presence or absence of sanitization and validation at each layer

- Security model alignment: Consider the application's intended users, functional specifications, and existing security measures. For example, XSS in a feature used only by administrators has different risk implications than XSS in a feature used by general users

- Application-specific validation criteria: Based on the threat model and permission model of the target application, determine whether the reported issue deviates from the application's intended specification

- Attack scenario concretization: Determine whether a concrete attack scenario (example trigger inputs and the vulnerable code locations) can be constructed, rather than only an abstract description of a weakness

Only hypotheses that pass validation are adopted as vulnerabilities documented in the final report.
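The validation perspectives can be summarized as a verdict record. The field names and the rule that every perspective must pass are simplifying assumptions for illustration; the agent reaches its judgment by reading and tracing code, not by filling in booleans.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Verdict:
    # Data flow analysis: external input reaches the sink without effective sanitization/validation.
    tainted_input_reaches_sink: bool
    # Security model alignment: exploitable given the intended users and existing defenses.
    exploitable_under_security_model: bool
    # Application-specific criteria: behavior deviates from the app's threat/permission model.
    deviates_from_spec: bool
    # Attack scenario: trigger input plus vulnerable location, or None if only an abstract weakness.
    attack_scenario: Optional[str]

    def accepted(self) -> bool:
        """Simplifying assumption: a hypothesis must pass every perspective to be reported."""
        return (
            self.tainted_input_reaches_sink
            and self.exploitable_under_security_model
            and self.deviates_from_spec
            and bool(self.attack_scenario)
        )
```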

Comparison with Non-LLM Tools

This section compares Takumi's agent-based approach with traditional static application security testing (SAST) tools.

Traditional SAST refers to tools that detect vulnerabilities by matching predefined rules (patterns) against source code. Representative examples include Semgrep and CodeQL.

Configuration Cost

Deploying traditional SAST requires the following setup work:

- Selecting appropriate rule sets for the target language and framework

- Customizing rules to match project-specific coding patterns

- Tuning rules and configuring exclusions to suppress false positives

For example, if you use a custom sanitization function from a proprietary framework, SAST will not recognize it as sanitization, resulting in false positives. Resolving this requires rule customization.
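To make the configuration cost concrete, here is a deliberately naive line-based rule. Real tools such as Semgrep and CodeQL match on syntax trees and data flow rather than raw text, and `acme_escape` is a hypothetical in-house sanitizer, but the effect is the same: until the rule is told about the sanitizer, code that uses it is flagged.

```python
import re

# Toy rule: flag .execute() calls whose argument is built dynamically
# (string concatenation or an f-string) unless it passes through a known sanitizer.
SINK = re.compile(r"\.execute\((?P<arg>.*)\)")


def scan(lines: list[str], known_sanitizers: list[str]) -> list[int]:
    """Return 1-based line numbers that the toy rule reports."""
    findings = []
    for lineno, line in enumerate(lines, start=1):
        m = SINK.search(line)
        if not m:
            continue
        arg = m.group("arg")
        built_dynamically = "+" in arg or arg.lstrip().startswith(('f"', "f'"))
        sanitized = any(s + "(" in arg for s in known_sanitizers)
        if built_dynamically and not sanitized:
            findings.append(lineno)
    return findings
```

With `known_sanitizers=[]`, a line such as `cursor.execute("... WHERE id = " + acme_escape(user_id))` is reported; adding `"acme_escape"` to the list, the equivalent of customizing the rule, suppresses that false positive.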

With Takumi, assessments can be started simply by specifying the codebase. Since the agent autonomously understands the code structure and framework conventions, no rule writing or tuning is required.

Discovery of Business Logic Vulnerabilities

Traditional SAST excels at detecting vulnerabilities that can be expressed as syntactic patterns in code, such as "user input is passed to an SQL query without sanitization."

However, the following types of vulnerabilities, which require understanding the meaning and context of the code, are difficult to detect:

- Missing authorization: An API endpoint handles a resource that "should be operable only by administrators" but does not implement authorization checks. Detecting this requires understanding the business rule that "only administrators should be able to operate on this resource," which cannot be determined from syntactic patterns alone

- Restriction bypass: In an e-commerce site's order finalization process, the order finalization function does not check payment completion as a precondition, so calling the API directly starts the shipping process without payment. This is not an issue with individual lines of code but with the management of preconditions across the entire workflow
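The restriction-bypass example can be reduced to a few lines. The `Order` type, the `PaymentRequired` exception, and both functions are hypothetical. The point is that the vulnerable version contains no dangerous syntax for a pattern rule to match; the flaw only becomes visible once you know that shipping must follow payment.

```python
from dataclasses import dataclass


@dataclass
class Order:
    id: int
    paid: bool = False
    shipped: bool = False


class PaymentRequired(Exception):
    pass


def finalize_order_vulnerable(order: Order) -> None:
    # No injection, no dangerous sink: every line looks "clean" to a syntactic rule.
    # The bug is the missing precondition, so a direct API call ships an unpaid order.
    order.shipped = True


def finalize_order(order: Order) -> None:
    # Enforce the workflow precondition before starting the shipping process.
    if not order.paid:
        raise PaymentRequired(f"order {order.id} has not been paid")
    order.shipped = True
```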

With Takumi, agents analyze code with an understanding of its meaning, enabling them to discover such business logic vulnerabilities.

Execution Cost

Because traditional SAST performs pattern matching, it runs quickly on ordinary computing environments. Even large codebases typically finish within minutes to tens of minutes.

Because Takumi's agents read and analyze code incrementally, an assessment takes longer and consumes more credits than traditional SAST. The larger the target codebase, the more features there are to inspect and hypotheses to generate, so execution time and credit consumption grow accordingly.

Reproducibility

Traditional SAST operates deterministically. Given the same code and rules, it always produces the same results no matter how many times it is executed.

Because Takumi relies on LLMs, which are probabilistic, assessment results for the same code may vary slightly between runs. A vulnerability found in one run may be missed in another, and vice versa.