Blackbox Assessment

The English user guide is currently in beta preview. Most of the documents have been automatically translated from the Japanese version. Should you find any inaccuracies, please reach out to Flatt Security.

This page explains the internal architecture of Takumi's blackbox assessment. For usage instructions, see Blackbox Assessment.

Overview

Takumi's blackbox assessment uses LLM-powered agents that act like human security engineers, performing actual attacks against target web applications to discover vulnerabilities. The agents operate a browser and shell within a sandbox environment to communicate with the application, and discovered vulnerabilities are verified by deterministic verifiers (Oracles) that do not depend on LLMs.

The overall picture of an assessment is as follows:

- An agent acts like a human security engineer, operating a browser and shell within a sandbox environment to execute attacks against the target web application

- All communications from the browser and shell are routed through a proxy and recorded. Communication logs are used for analyzing attack results and for verification by Oracles

- A dedicated callback server is used to detect Out-of-Band (OOB) vulnerabilities such as SSRF

- Vulnerabilities discovered during the attack phase are verified, wherever possible, by deterministic Oracles that do not depend on LLMs, which filters out suspected vulnerabilities that cannot be reproduced

Environment Isolation

The sandbox environment is isolated per assessment. Each assessment runs within a dedicated VM, and browser profiles, proxy logs, and file systems are never shared with other assessments. This guarantees data isolation between different assessment targets.

Assessment Flow

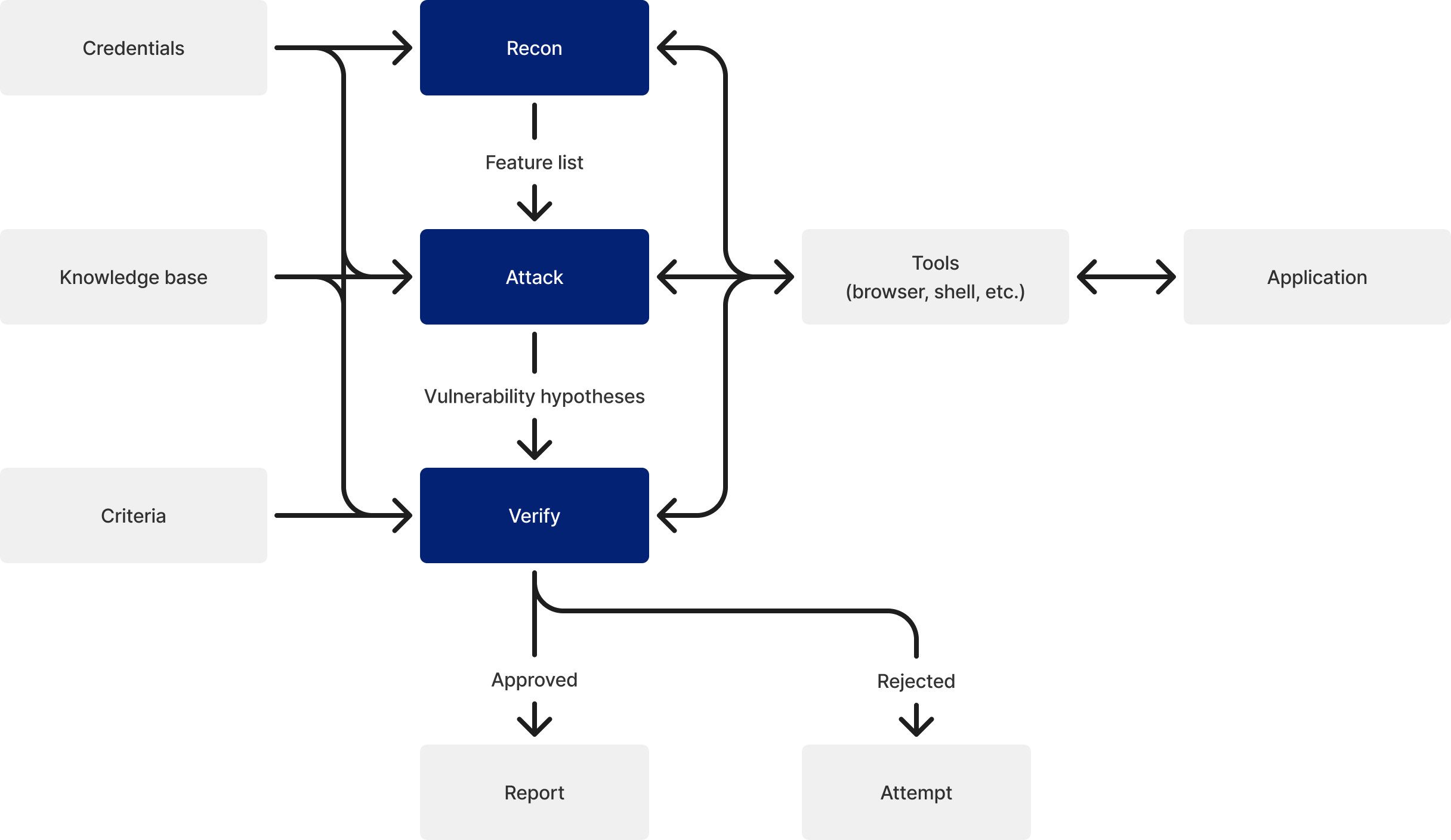

In a blackbox assessment, the agent executes three phases in sequence: Recon, Attack, and Verify.

Recon Phase

First, the agent navigates the target application using a browser and automatically discovers features. If authentication is required, the agent signs in using the provided credentials before navigating. The agent follows links and forms within the application to enumerate available features and classifies the characteristics of each feature (authentication-related, payment-related, API endpoint, etc.). Furthermore, the agent identifies endpoints associated with each feature (page URLs, API paths) and records request destinations to be tested in the subsequent attack phase.

Attack Phase

For each feature discovered in the recon phase, the agent combines vulnerability perspectives (injection, XSS, authorization flaws, etc.) and executes actual attacks. Most perspectives are applied to all features, but some perspectives are filtered based on feature characteristics. For example, clickjacking and CORS perspectives are applied only to top-level features, and authentication flaw perspectives are applied only to authentication-related features.
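The filtering rule described above can be sketched as a small lookup. This is only an illustration: the tag names (`top-level`, `authentication`), the perspective identifiers, and the `Feature` class are hypothetical and are not Takumi's actual data model.

```python
from dataclasses import dataclass, field

# Hypothetical model of a feature discovered in the recon phase.
@dataclass(frozen=True)
class Feature:
    name: str
    tags: frozenset = field(default_factory=frozenset)

# Assumed perspective names; the real list is not published.
ALL_PERSPECTIVES = [
    "injection", "xss", "authorization",
    "clickjacking", "cors", "authentication-flaw",
]

# Perspectives that apply only when the feature carries the given tag.
RESTRICTED = {
    "clickjacking": "top-level",
    "cors": "top-level",
    "authentication-flaw": "authentication",
}

def perspectives_for(feature):
    """Return the perspectives to test for a feature, in declaration order."""
    return [
        p for p in ALL_PERSPECTIVES
        if p not in RESTRICTED or RESTRICTED[p] in feature.tags
    ]
```

With these assumed tags, a feature tagged `authentication` receives the general perspectives plus `authentication-flaw`, while clickjacking and CORS are skipped for it.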

The agent uses tools executable within the sandbox environment to attack the target application. The main tools are as follows:

- Browser: Performs operations similar to an actual user, such as page navigation, form input, clicking, JavaScript execution, and cookie inspection

- Shell: Sends HTTP requests directly using curl, Python scripts, etc.

- Communication log search: Searches all communications recorded by the HTTP proxy and analyzes attack results

- Callback server: Detects communications from the application to external destinations, such as SSRF

- Knowledge base: References attack techniques (payloads, bypass methods, etc.) for each vulnerability perspective
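As an illustration of how a callback server can tie an inbound request back to a specific probe, the sketch below embeds a unique token in the callback URL and later searches the callback log for it. The host name `oob.example.test`, the token-in-subdomain scheme, and the log format (a list of strings) are assumptions made for this example, not a description of Takumi's callback server.

```python
import secrets

def new_probe(callback_host):
    """Create a unique token and the URL to plant in an SSRF payload."""
    token = secrets.token_hex(8)  # 16 hex characters, unique per probe
    return token, f"http://{token}.{callback_host}/"

def was_called_back(token, callback_log):
    """Return True if any logged inbound request mentions the probe's token."""
    return any(token in entry for entry in callback_log)
```

Because each probe carries its own token, a hit in the log can be attributed to one specific request rather than to unrelated background traffic.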

The agent analyzes attack results and records discovered vulnerabilities as Findings. A separate agent then reviews the recorded Findings, suggesting improvements and making corrections, before they are reflected in the final report.

Vulnerability Verification Mechanism

Vulnerabilities discovered in the attack phase are deterministically verified using Oracles. This mechanism is designed to eliminate false positives (non-existent or non-reproducible vulnerabilities).

Verification Flow

Findings recorded during the attack phase are verified through the following three stages.

First, if the application's specification is provided, the Finding is checked against the application's intended behavior. For example, if the specification states that "administrators can view all users' data," such behavior confirmed during the attack phase is determined to be normal operation, not an access control flaw.

Next, the agent attempts to reproduce the vulnerability based on the reproduction steps described in the Finding. Using the same tools as those used in the attack phase (browser, shell, etc.), the agent re-confirms whether the vulnerability truly exists. If reproduction fails, the Finding is rejected at this stage.

Finally, Findings that were successfully reproduced are verified by the Oracle. Oracles are not LLMs but specialized programs prepared for each vulnerability type. For example, for XSS, the Oracle checks whether a specific value was output to the browser console; for SQL injection, it checks whether delay-inducing payloads cause a measurable difference in response time. In this way, the Oracle decides whether a vulnerability is present based on objective criteria.
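To make the time-based check concrete, here is a minimal sketch of a deterministic decision over measured response times. The function name, the use of medians, and the `tolerance` threshold are assumptions for illustration; the criteria the actual Oracle uses are not documented here.

```python
from statistics import median

def delay_confirmed(baseline_s, delayed_s, injected_delay_s, tolerance=0.5):
    """Return True if delay-payload requests are slower than normal ones
    by at least `tolerance` times the injected delay.

    baseline_s: response times (seconds) for normal requests
    delayed_s:  response times (seconds) for delay-payload requests
    tolerance:  assumed fraction of the injected delay that must be observed
    """
    if not baseline_s or not delayed_s:
        return False  # nothing to compare, so the check cannot confirm
    observed = median(delayed_s) - median(baseline_s)
    return observed >= injected_delay_s * tolerance
```

Comparing medians over several samples, rather than a single pair of requests, makes the decision less sensitive to one slow response caused by network jitter.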

Oracle Types

Specialized Oracles are prepared for each vulnerability type. The following are examples of Oracles.

| Vulnerability Type | Verification Method |
|---|---|
| XSS | Detection of a canary value (unique number) output to the browser console |
| SQL Injection | Time-based verification (measuring response time difference between normal requests and delay-payload requests) |
| SSRF | Confirmation of OOB communication to the callback server |
| SSTI | Searching proxy logs for evaluation results of arithmetic expressions (e.g., the product of two numbers) |
| Path Traversal | Searching proxy logs for known patterns of file contents |
| Access Control (Read) | Comparing canary values between authorized and unauthorized user sessions to determine information leakage |
| Access Control (Write) | After a write operation by an unauthorized user, an authorized user confirms whether the change was applied |
| Open Redirect | Verifying whether the browser's redirect destination domain matches the attacker-specified domain |
| Clickjacking | Verifying the presence of X-Frame-Options and Content-Security-Policy frame-ancestors headers |
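The SSTI row can be illustrated with a short sketch: pick two random factors, send them as a template expression, and look for their product in the recorded responses. The `{{a*b}}` syntax assumes a Jinja2-style template engine, and the function names and the list-of-strings log format are hypothetical.

```python
import random

def make_ssti_probe(rng=random):
    """Pick two random 4-digit factors; return (payload, expected product)."""
    a = rng.randint(1000, 9999)
    b = rng.randint(1000, 9999)
    payload = "{{" + f"{a}*{b}" + "}}"  # assumed Jinja2-style expression
    return payload, str(a * b)

def ssti_confirmed(expected, response_bodies):
    """Return True if any recorded response body contains the evaluated product.

    A response that merely echoes the raw payload does not match, because
    the payload contains the two factors, not their product.
    """
    return any(expected in body for body in response_bodies)
```

Using random factors means the expected product is unlikely to appear in a page by coincidence, which keeps the check objective.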

Final Determination

The final determination is made solely based on the Oracle's result. The agent's judgment does not affect the final outcome.

If the Oracle confirms the vulnerability, the Finding is reported as a vulnerability. If the Oracle cannot confirm the existence of a vulnerability, the Finding is rejected even if the agent operating during the attack phase judged it to be vulnerable.

This design structurally eliminates false positives reported by agents.
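The three verification stages and the Oracle-only final decision can be summarized in a sketch like the following. The `Finding` class, the callables, and the verdict strings are hypothetical names chosen for this example. Note that the agent's own judgment is stored but never consulted.

```python
from dataclasses import dataclass
from typing import Callable, Optional

@dataclass
class Finding:
    title: str
    agent_says_vulnerable: bool  # kept for context only; never read below

def final_verdict(
    finding: Finding,
    matches_spec: Optional[Callable[[Finding], bool]],
    reproduce: Callable[[Finding], bool],
    oracle: Callable[[Finding], bool],
) -> str:
    # Stage 1: only runs when an application specification was provided.
    if matches_spec is not None and matches_spec(finding):
        return "rejected: intended behavior"
    # Stage 2: the agent replays the reproduction steps.
    if not reproduce(finding):
        return "rejected: not reproducible"
    # Stage 3: the deterministic Oracle alone decides the outcome.
    return "reported" if oracle(finding) else "rejected: oracle could not confirm"
```

For example, a Finding the agent believed in is still rejected when `oracle` returns `False`, which mirrors the rule stated above.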

Comparison with Non-LLM Architectures

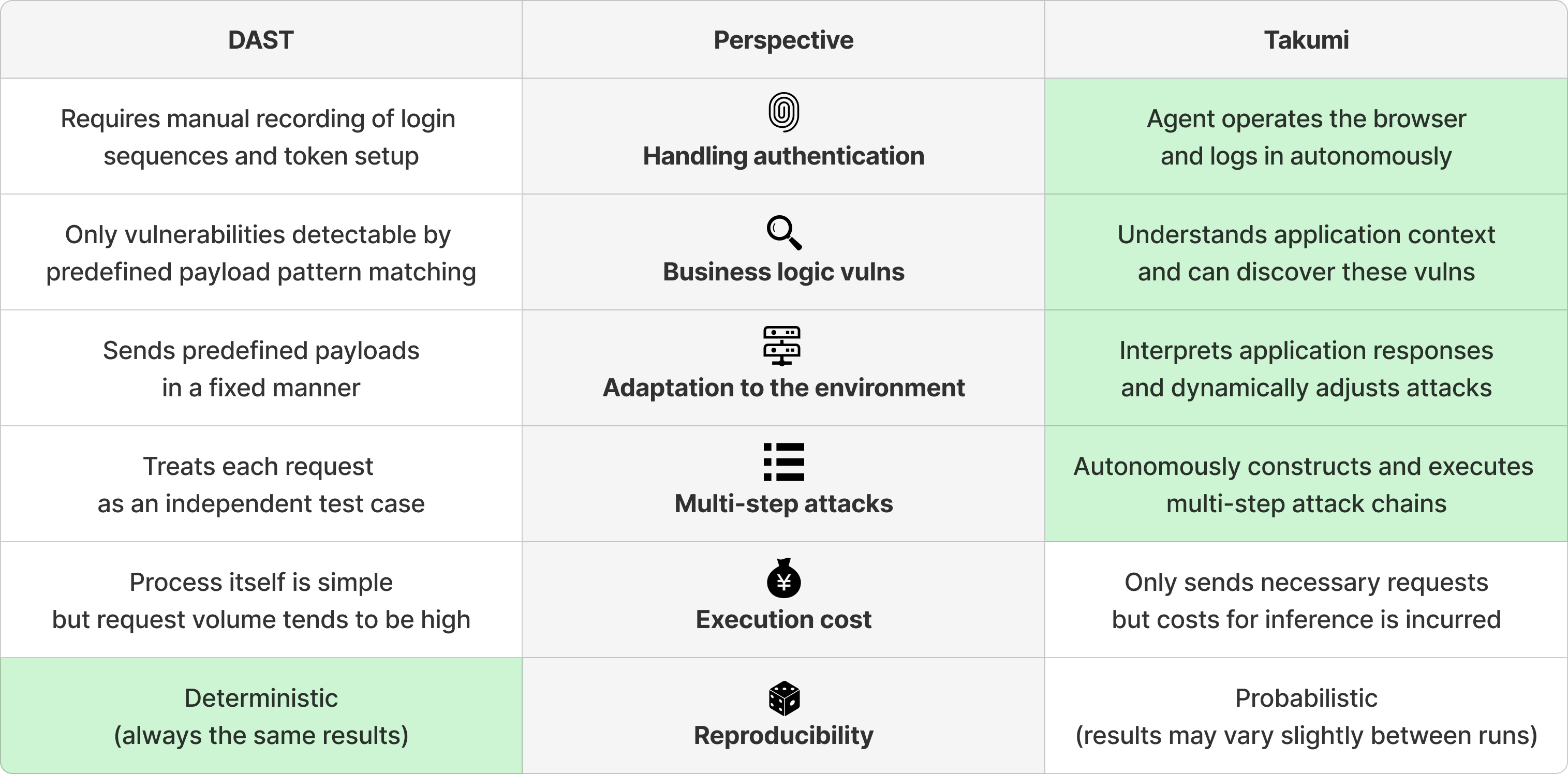

This section compares Takumi's agent-based approach with traditional dynamic application security testing (DAST) tools.

Traditional DAST refers to tools that detect vulnerabilities by sending predefined payloads to web applications and analyzing their responses. Representative examples include the scanning features of OWASP ZAP and Burp Suite.

Handling Authentication

Since many features of web applications are accessible only after authentication, passing through the authentication flow is a prerequisite for blackbox assessments.

To assess post-authentication screens with traditional DAST, the following preparation is required:

- Manually recording the login sequence (URL, parameters, request order)

- Manually obtaining a valid session token and configuring it in the scanner

- Configuring re-authentication logic for session expiration

With this approach, configuration updates are needed whenever the login form or login API specifications change. Additionally, automation itself becomes difficult for authentication flows that include one-time passwords or CAPTCHAs, and for redirect-based authentication flows such as OAuth or SAML.

With Takumi's blackbox assessment, the agent operates the browser using the provided credentials and autonomously executes the login process. Since the agent understands and operates the on-screen UI, pre-recording of the login sequence is unnecessary. Even if the login UI or API structure changes, the agent adapts on the fly.

Discovery of Business Logic Vulnerabilities

Traditional DAST typically detects vulnerabilities by sending predefined payloads to input fields and pattern-matching the responses. For example, it sends `<script>alert(1)</script>` to an input field and determines that XSS exists if the payload is reflected in the response as-is. This approach is effective for technical vulnerabilities such as SQL injection and XSS, but is difficult to apply to the following types of vulnerabilities, which require understanding the application's context:

- IDOR (Insecure Direct Object Reference): If a user profile page URL is `/api/users/123`, changing the ID to `124` reveals another user's profile. Detecting this requires understanding the context that "123 is my ID, 124 is someone else's ID, and another user's profile should not be viewable"; simply changing parameters and sending requests is insufficient for determination

- Privilege escalation: Calling an admin-only API endpoint (e.g., `/api/admin/users`) with a general user session succeeds due to missing authorization checks. Detecting this requires understanding the application's permission model that "this endpoint should be accessible only to administrators"

- Restriction bypass: On an e-commerce site, the expected flow is cart → payment → order confirmation, but calling the order confirmation API directly starts the shipping process without completed payment. This is not a problem with individual requests but with the precondition checks of the processing flow

With Takumi's blackbox assessment, agents perform attacks with an understanding of the application's features and context, enabling them to discover such business logic vulnerabilities. For example, in IDOR detection, the agent first accesses a resource as a legitimate user, then replaces the ID with another user's and attempts access, comparing the response contents to determine whether unauthorized access succeeds.
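The response comparison in the IDOR example can be reduced to a canary check: plant a unique value in the owner's resource, then see whether a different user's response contains it. The function name and the string-based responses are assumptions for this sketch.

```python
def leaks_canary(canary, owner_body, other_body):
    """Canary-based read check for unauthorized access.

    canary:     unique value planted in the owner's resource
    owner_body: response body when the owner fetches the resource
    other_body: response body when another user requests the same resource ID
    """
    if canary not in owner_body:
        # Without the canary in the owner's view, the comparison proves nothing.
        raise ValueError("canary missing from owner's response; check is inconclusive")
    return canary in other_body
```

Checking the owner's response first guards against a false "no leak" result when the canary was never stored in the resource to begin with.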

Adaptation to the Environment

Traditional DAST typically sends predefined payloads sequentially to a preconfigured list of endpoints. As a result, testing can become ineffective in the following situations:

- Input format mismatch: After an application API format change, the scanner sends requests in the old format, which are rejected by the API

- Dynamic tokens: The scanner cannot correctly handle values that change per request, such as CSRF tokens and nonces, causing requests to be rejected by the server

With Takumi's blackbox assessment, the agent incrementally interprets the application's responses and dynamically adjusts attack methods. For example, the agent checks the request format an API expects before attacking it, and retrieves CSRF token values from pages and includes them in requests when needed.
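As one way to picture the CSRF token step, the sketch below pulls a hidden form field out of a page using Python's standard `html.parser`. The default field name `csrf_token` is an assumption; real applications use many different names, and tokens may also live in meta tags or cookies.

```python
from html.parser import HTMLParser

class CsrfTokenParser(HTMLParser):
    """Record the value of the first <input> whose name matches field_name."""

    def __init__(self, field_name="csrf_token"):  # field name is an assumption
        super().__init__()
        self.field_name = field_name
        self.token = None

    def handle_starttag(self, tag, attrs):
        if tag == "input" and self.token is None:
            attributes = dict(attrs)
            if attributes.get("name") == self.field_name:
                self.token = attributes.get("value")

def extract_csrf_token(html, field_name="csrf_token"):
    """Return the token value, or None if the field is not present."""
    parser = CsrfTokenParser(field_name)
    parser.feed(html)
    return parser.token
```

The extracted value would then be included in the next state-changing request, so the server does not reject it for a missing or stale token.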

Multi-Step Attacks

Traditional DAST treats each request as an independent test case. As a result, it is difficult for such tools to autonomously verify attack scenarios that span multiple steps, such as "send request A, then construct request B based on its response."

However, real-world penetration testing often requires multi-step attacks such as:

- Log in as User A and obtain the ID of Resource X → re-login as User B and attempt to access User A's Resource X (IDOR verification)

- Add an item to the cart, apply a coupon, and tamper with the price parameter just before sending the payment request (price manipulation verification)

- Upload an SVG file via the file upload feature, navigate to the page where it is displayed, and check whether the embedded JavaScript executes (Stored XSS verification)

With Takumi's blackbox assessment, the agent leverages its tools to autonomously construct and execute such multi-step attack chains. Since it understands the results of each step before assembling the next, it can reproduce the same attack flows as a human security engineer.
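The idea of building each step from the result of the previous one can be sketched as a chain of functions that share a context dictionary. The runner and the step functions here are hypothetical stand-ins; in practice each step would drive the browser or shell rather than return fixed values.

```python
def run_chain(steps, context=None):
    """Run steps in order, passing accumulated results to each one.

    Each step takes the current context (a dict) and returns a dict of
    new values, or None to abort the chain.
    Returns (final context, True if every step completed).
    """
    context = dict(context or {})
    for step in steps:
        update = step(context)
        if update is None:
            return context, False
        context.update(update)
    return context, True

# Usage: step 2 builds its request from what step 1 observed.
steps = [
    lambda ctx: {"resource_id": "X-42"},                         # as User A
    lambda ctx: {"url": "/api/resources/" + ctx["resource_id"]}, # as User B
]
result, completed = run_chain(steps)
```

A scanner that treats requests independently has no equivalent of `context`, which is why the second step cannot be expressed there.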

Execution Cost

Traditional DAST mainly sends payloads from a list and pattern-matches the responses, so the computational cost per request is low. However, since it exhaustively sends a large number of predefined payloads, the total number of requests can become enormous when there are many target endpoints or payload types.

Takumi has a higher computational cost per request because the agent incrementally interprets the application's responses while performing attacks. On the other hand, since the agent selectively tries only the attack methods it deems necessary, the total number of requests tends to be lower.

As such, the cost characteristics of the two approaches differ, and which one is more cost-effective depends on the scale and configuration of the target application.

Reproducibility

Traditional DAST operates deterministically. Given the same application and configuration, it always produces the same results no matter how many times it is executed.

Due to the probabilistic nature of LLMs, Takumi's assessment results for the same application may vary slightly between runs. A vulnerability discovered in one run may not be found in another run, or vice versa.